Jan/Feb/Mar 2019 travel

30 Apr 2019After the stay in California, I didn’t do a load of travel in the first three months of 2019, mostly due to being staffed in Chicago & spending some time there. Also, there isn’t a lot of easily accessible hiking in winter!

This post covers what I was up to for the first three months of the year, and is mostly about Chicago.

Chicago

Early weather

Chicago in winter is a level of cold that I’m not well acquainted with 🥶

I drove up from Cincinnati, and was blessed with a snow storm the night before my drive:

I guess it was a sign of things to come - a fortnight later was the 2019 polar vortex, which shut down most of the city for a couple of days. It was the only time I saw the river completely frozen over:

I was staying a very short distance from work and decided to walk during the cold weather, which was an experience. My face was completely covered, except for my eyes, which watered. Of course the water from my struggling eyes froze, along with the tiny bit of hair that was exposed and water condensing in my nose (producing a satisfying crunch when I pinched my nostrils).

Field Museum

I went full tourist in Chicago and purchased a CityPASS, good for entry into 5 attractions. The first I visited was the Field Museum. For some reason I don’t have loads of photos here, but the main things I remember are

- the huge collection of taxidermied animals

- SUE the T. rex

- the Underground Adventure

Art Institute of Chicago

I’m not normally one for art museums, but The Art Institute has a great collection, including a number of paintings by van Gogh, Picasso, Pollock, Warhol and more. The Deering Family Galleries of Medieval and Renaissance Art, Arms, and Armor (an ongoing exhibit) was another highlight, and I was amazed at the detail in the 68 Thorne Miniature Rooms.

Skydeck Chicago

I’ve been told by locals that under no circumstances am I to refer to the Sears Tower by it’s new name, The Willis Tower. Regardless of the name, the views from the The Skydeck are spectacular:

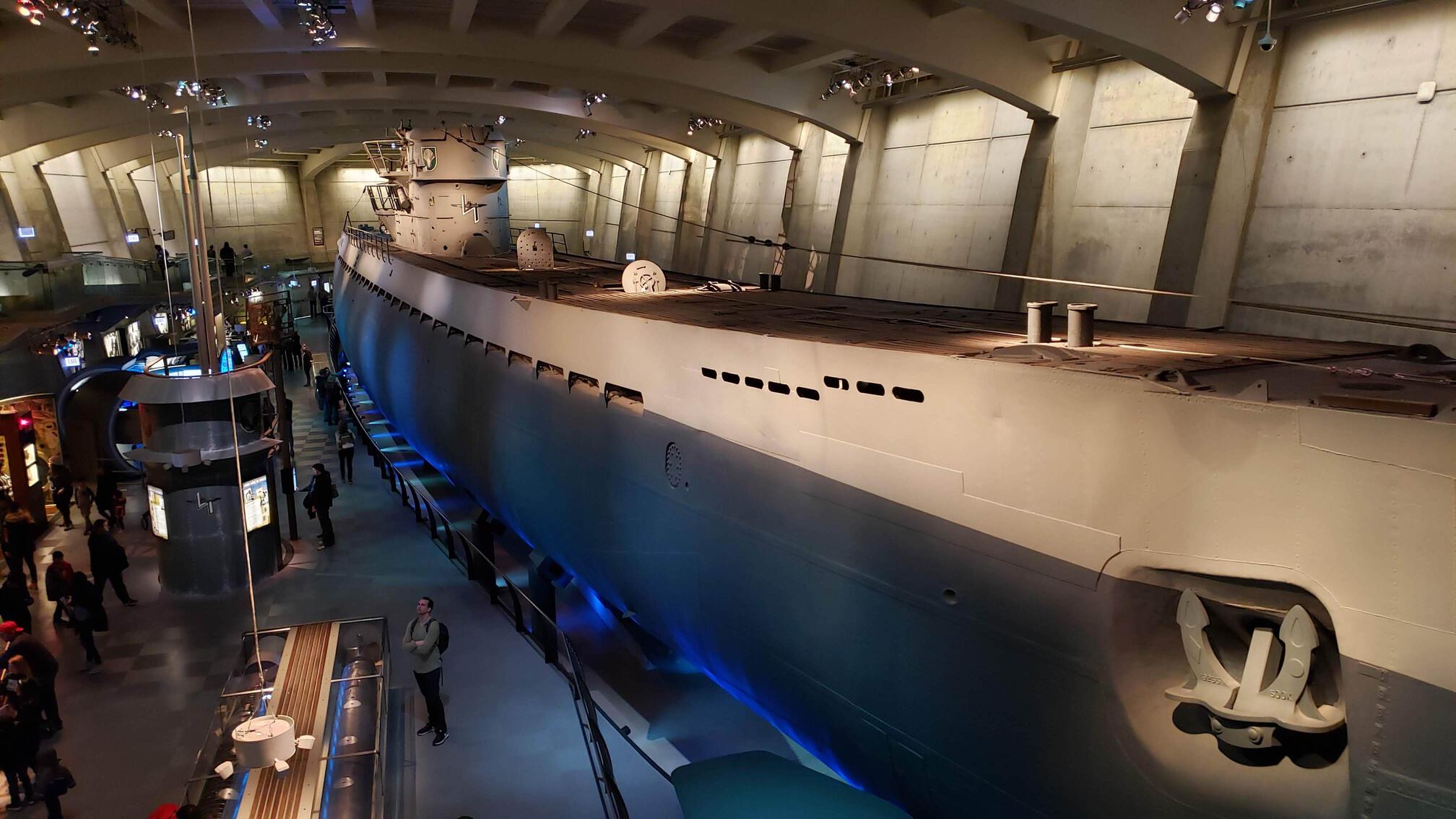

Museum of Science and Industry

I think the Museum of Science and Industry in Chicago is my new favourite museum, with the Deutsches Museum in Munich a close second.

It’s absolutely huge, I could easily spend far more than a day there. A few highlights, in no particular order:

The mirror maze was oddly peaceful for the few seconds I was in there alone! For the rest of the time I was just trying to avoid running into the kids that were barrelling though.

St Patrick’s Day

St Patrick’s Day in Chicago was an experience … I knew it was a huge event, but I didn’t expect the streets to be packed from 7:30 AM.

… Spring?

I’ve been warned that March warm weather in Chicago only lulls you into a false sense of security. As I’m writing this it’s closer to the end of April & I’m hopeful the worst of the cold weather is finished. As a reference, here’s a recent photo of Maggie Daley Park & Grant Park - it looks a little more inviting than the photo earlier in this post!

LA & Spartan Race

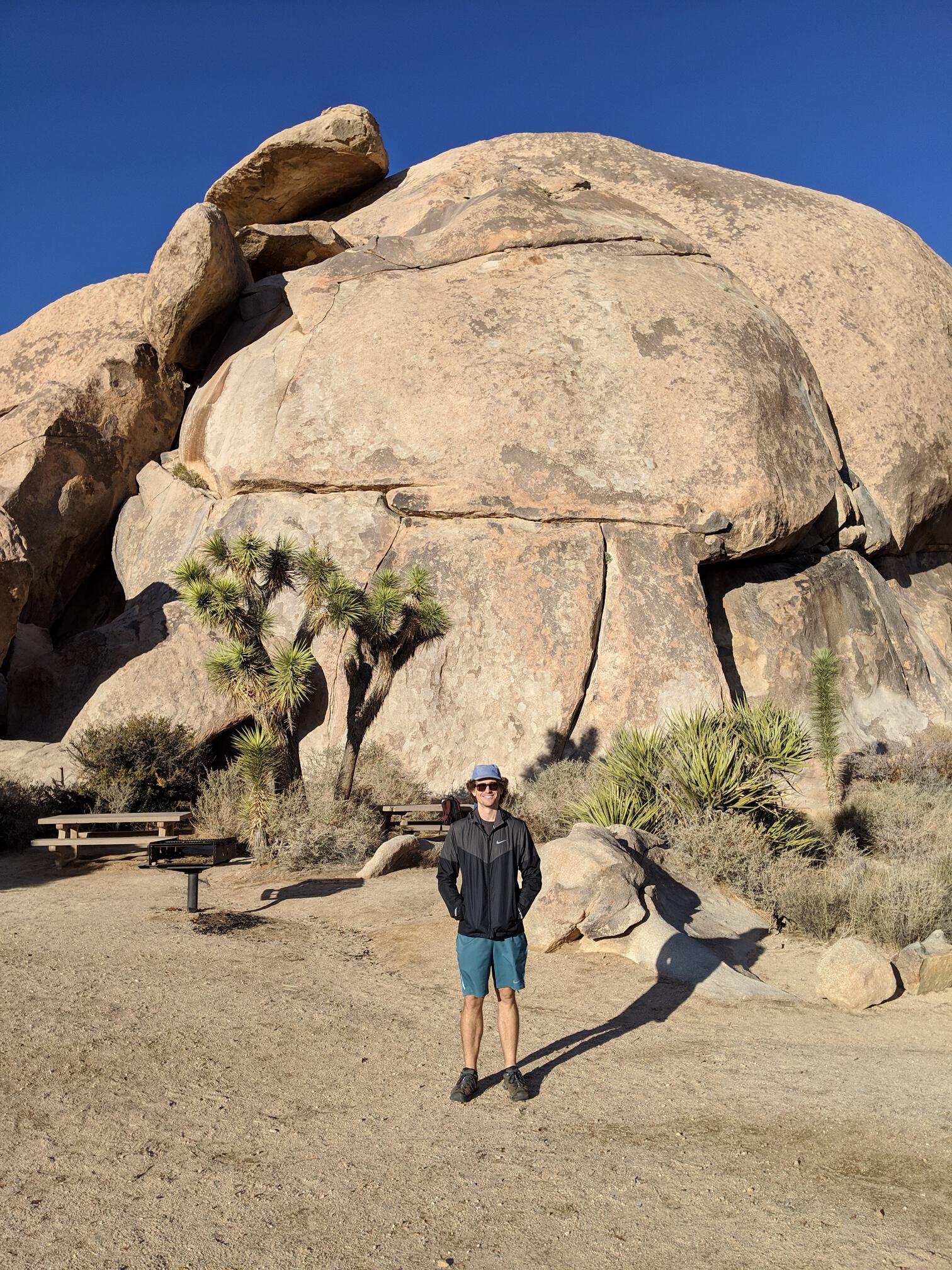

I visited LA briefly in December on a trip to go hiking in Joshua Tree National Park, but most of the time in LA itself was spent sitting in traffic.

I went back in January to do the Spartan SoCal Sprint just outside of LA, and had a chance to see a little more of LA itself.

Griffith Observatory is definitely worth a visit, for the exhibits as well as the views of LA.

On Saturday we spent some time in Venice Beach area, including the Venice Canals and a ride along the boardwalk and ocean front walk. It was a nice change from the winter in Chicago!

Seattle

I had planned a trip to Chicago the weekend after the polar vortex hit Chicago, but my flight out was cancelled due to the weather. I still made it out of Chicago the next day, but that made for a fairly short trip, so I changed my plans to spend the time in Seattle itself and ditched the hiking I was planning in Mt Rainier National Park.

I stayed right near Pike Place Market which turned out to be a great spot.

Some notable places:

- Piroshky Piroshky bakery: I may have returned here more than once, fodder was delicious but not entirely compatible with my desire to be somewhat healthy 🤣

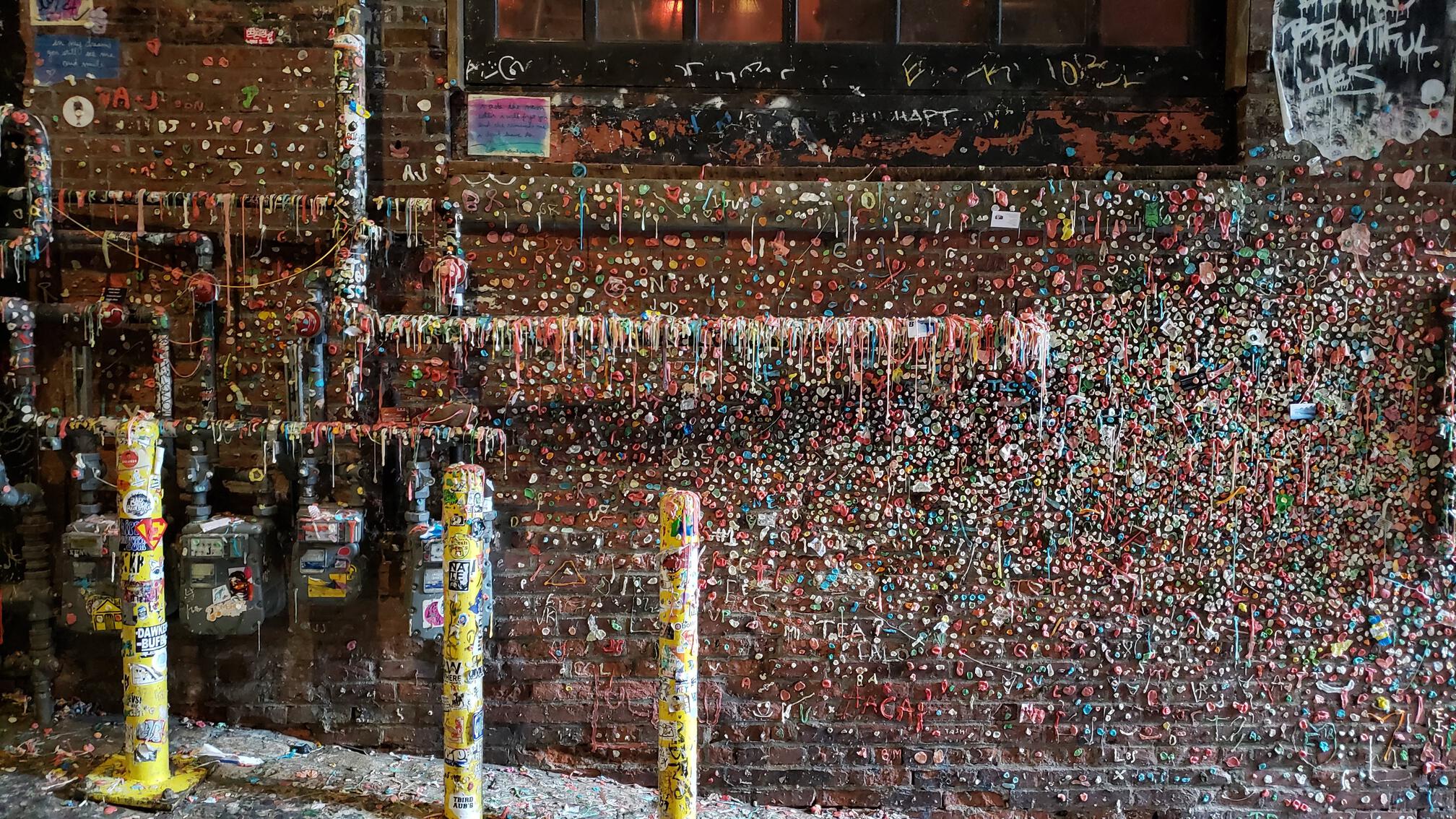

- The Gum Wall: one of the most germ ridden attractions in existence

- The original Starbucks

- Underground tour: it was interesting hearing about how Seattle was built, it is honestly a miracle they didn’t just give up. Wikipedia has some info on the underground.

- Seattle Center: I’d like to go back here, there is loads to do. It was the site of the 1962 World’s Fair. The Space Needle, MoPOP and the Chihuly Garden and Glass are apparently all worth a visit, but I just walked around the area without going in.

South Lake Tahoe

My first trip in March was to South Lake Tahoe for a weekend of skiing. There was a little more snow there than I’ve seen in Australia before 😂.

The base of the resort is at 1,907 m and the top is 3,068 m, so it isn’t super high but its location near the lake means lots of snow (the peak of Mount Kosciuszko is 2,228 m, so most of the Thredbo resort in Australia is less than 2,000 m).

Toronto

The second trip for March was to Toronto, again a short trip so I really only had time to explore part of Old Toronto near where I was staying:

- St. Lawrence Market

- Distillery District

- Yonge-Dundas Square

- CF Toronto Eaton Centre

- Kensington Market